I recently launched a small hobby website that aggregates documents and papers posted to a popular tech news website. Some of the feedback I received after the launch included suggestions to categorize the aggregated documents. It seemed like a nice, small exercise in document categorization, and I decided to take a shot using the data I had on hand, with the objective being to determine the category for a document from just the title text.

For starters, I limited the dataset to arXiv.org submissions, and used the categories associated with each document as ground truth labels. After playing around with the data, I realized that I would need an expanded dataset if I wanted to train useful models that could differentiate between a variety of subjects beyond just those related to science and technology, such as business, economics, games, news, and politics.

Enter the Mechanical Turks

In order to get my hands on a quality dataset with document labels for the categories I wanted, I turned to Amazon Mechanical Turk. I had prior experience with Amazon Mechanical Turk from using it shortly after its initial launch, playing around as a worker and earning pennies per task by solving unsophisticated CAPTCHA puzzles or examining satellite imagery to look for famed computer scientist and missing person Jim Gray.

After signing in as a requester and setting up my project, I was struck by how outdated the entire Amazon Mechanical Turk website appeared. Upon creation of a project and submission of a batch of tasks, simply viewing the progress of the tasks and downloading the ongoing results is a very clunky experience. The modal dialogs feel like they’re stuck in 2007, and look out of place when compared to the user interfaces of modern AWS services. However, the lackluster user experience as a requester was nothing compared to the anger I would soon face from other users as I started reviewing the results rolling in.

Big Bad Data

Document categorization is a fairly commonplace project by Amazon Mechanical Turk standards; the project creation page even has a built-in template that makes the setup for this class of projects fairly straightforward. A requester has the option of sending the same task (i.e. provide a label for a given document) to multiple workers, as to triangulate on the most appropriate answer in ambiguous or unclear cases.

After some manual inspection of a sample of the unlabeled dataset, these were chosen as the target categories:

- Business and Economics

- Computers and Technology

- Games and Hobbies

- Lifestyle

- Math and Science

- News, Politics, and Government

As I examined the results that came in after I submitted my first batch of requests, I was surprised by the poor quality of data for what should be a fairly straightforward task. Some of the examples were extreme, such as political documents or court case briefings getting labeled as “Games and Hobbies”. In fact, the most egregious mislabeled examples I found were all tagged with that label, as I came across several cases of technical papers, scientific journal submissions, and corporate earnings releases all miscategorized as such.

As a machine learning practitioner, the obvious thing to do was to reject the mislabeled data. A mislabeled document introduces noise to the model training process, and is particularly troubling in contexts involving a limited number of examples or features. Thus, my first inclination was to reject all responses that were not unanimous - even if two workers agreed on a label and a third worker provided a different label, all three submissions would be rejected. However, I decided that such a policy would be too harsh, and wrote some custom code to instead only reject submissions for documents that had no majority answer; that is, when all three responses were of different labels.

However, that meant that some babies would be thrown out with the bath water - as some appropriately labeled responses would be rejected along with the bad one. I did not see any other option; the whole point of using Amazon Mechanical Turk was to outsource the document labeling, and not have to manually inspect the outlier submissions and determine which ones were “right” or “wrong”.

Pitchforks

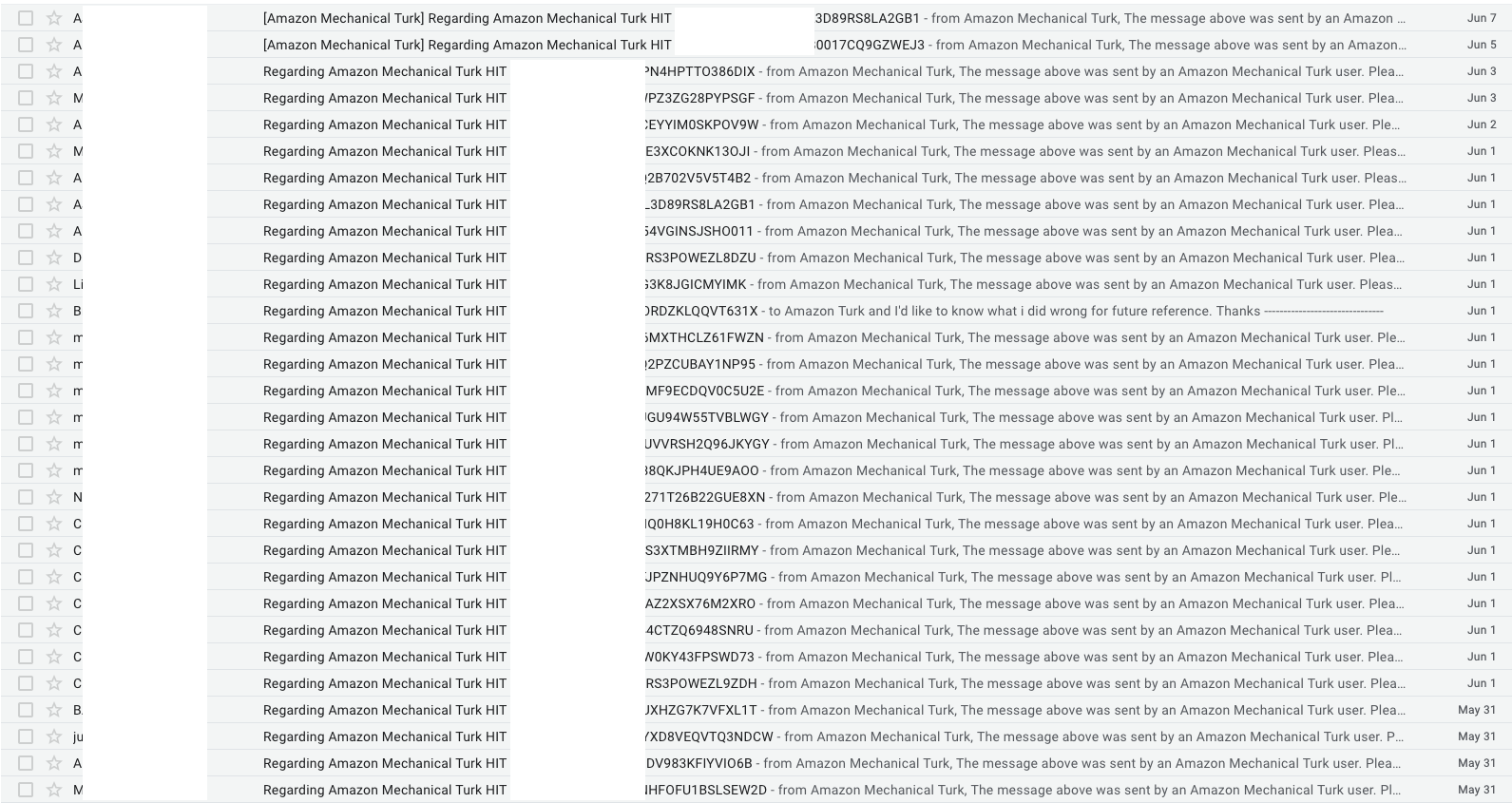

As soon as I submitted the reviews of the first batch of results, the angry feedback started flowing in. I received dozens of messages from workers: some were sincere apologies imploring for me to reconsider the rejection in order for the worker to retain their worker rating; others were disgruntled rants about how the rejection was unjust and a demand for correction.

Not only was I surprised by the amount of anger and frustration from these workers over tasks that paid only a penny each, but I felt that I had my hands tied as there was no other alternative. If I had not rejected submissions for documents that had no majority answer, I would’ve been left with unusable examples for a large fraction of my dataset. As a hobbyist, I can let it slide as there is no academic or business pressure to wring out all available value from the data. In fact, to avoid any further backlash, I ended up approving all submissions in the second batch, and decided to write off the poor dataset as a loss and forego the experiment altogether.

After this poor experience, I find it hard to see how real research projects using Amazon Mechanical Turk can deal with this level of data quality while managing the need to “appease” the workers creating these datasets and compensating them appropriately. Perhaps that is the reason many companies and researchers are turning to semi-supervised learning techniques and training models to generate labeled datasets or embeddings to be used by other models. It is a direction that could’ve been explored for this project; perhaps some off-the-shelf or well-known approach can be used in order to build topics from the comment thread text for each submission. At the very least, the semi-supervised models won’t get all up in arms about your treatment of their low accuracy results, and demand that you give pennies where pennies are due.

Data

A modified version of the resulting dataset is available on GitHub. The dataset includes the URLs for 2557 documents along with the labels tagged by the workers, with all of the Amazon Mechanical Turk metadata removed.

Comments